Cache sharing is a feature that lets Guardian servers share their cache entries with each other, either automatically or on demand.

Cache sharing benefits:

1. Improved performance: a higher cache hit rate.

2. Better user experience: very low latency, since more requests are served straight from the cache.

3. Enhanced security: reduced risk of cache poisoning.

Cache sharing mechanisms:

Automatic: every entry added to or updated in the cache is pushed to the other listening Guardian servers via multicast.

On demand: the entire cache is copied to a target Guardian server with a CLI command. This transfer is unicast.
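To make the difference between the two mechanisms concrete, here is a minimal Python model of them. It is a conceptual sketch only, not how Guardian implements cache sharing: the `Server` and `Group` classes, the `put` method and the `share_cache` function are hypothetical, and an in-memory list of peers stands in for the multicast group.

```python
"""Conceptual model of automatic (multicast) vs on-demand (unicast) sharing.

Hypothetical names throughout; this is not the Guardian implementation.
"""
from dataclasses import dataclass, field


@dataclass
class Server:
    name: str
    cache: dict = field(default_factory=dict)
    listening: bool = True  # only listening servers receive automatic pushes


@dataclass
class Group:
    """Stands in for the multicast group the servers listen on."""
    members: list = field(default_factory=list)

    def put(self, origin: Server, key: str, value: str) -> None:
        # Automatic mode: update the local cache, then push this single
        # new or updated entry to every other listening member.
        origin.cache[key] = value
        for peer in self.members:
            if peer is not origin and peer.listening:
                peer.cache[key] = value


def share_cache(source: Server, target: Server) -> int:
    # On-demand mode: copy the whole cache to one target (unicast).
    target.cache.update(source.cache)
    return len(source.cache)


# Usage: c is not listening, so it only gets entries via share_cache().
a, b, c = Server("a"), Server("b"), Server("c", listening=False)
group = Group([a, b, c])
group.put(a, "example.com", "192.0.2.10")
print(b.cache)             # {'example.com': '192.0.2.10'}
print(c.cache)             # {}
print(share_cache(a, c))   # 1
```

The point it illustrates: automatic sharing sends one entry per change to every listening peer, while the on-demand copy sends the whole cache to a single target in one operation.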

How to configure:

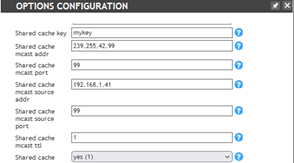

From the GUI, open the options of the smart or of the Guardian server and add the following settings:

1. A cache sharing key.

2. A multicast address and port. This port must be allowed in the firewall.

3. Optionally, the source IP address and port of the server that shares the cache.

4. The shared cache TTL, that is, the number of router hops the multicast packets may cross. Set it to 1 so the traffic stays on the local network segment.

5. The shared cache option, set to yes.

Configure the same settings on the other Guardian servers that share the cache.

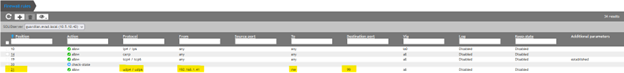
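The settings above can be sanity-checked before they are entered on each server. The sketch below is a hypothetical Python helper, not part of the Guardian GUI or CLI: the `CacheSharingSettings` field names are made up to mirror the listed settings, and the rule that peers must use the same key, multicast address and port is an assumption drawn from configuring the same settings on every server.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass
class CacheSharingSettings:
    # Hypothetical field names mirroring the GUI settings.
    key: str
    multicast_address: str
    port: int
    ttl: int = 1
    enabled: bool = True
    source_ip: Optional[str] = None
    source_port: Optional[int] = None


def check_settings(s: CacheSharingSettings) -> list:
    """Return a list of problems; an empty list means none were detected."""
    problems = []
    if not s.key:
        problems.append("cache sharing key is empty")
    try:
        if not ipaddress.ip_address(s.multicast_address).is_multicast:
            problems.append(f"{s.multicast_address} is not a multicast address")
    except ValueError:
        problems.append(f"{s.multicast_address} is not a valid IP address")
    if not 1 <= s.port <= 65535:
        problems.append(f"port {s.port} is out of range")
    if s.ttl != 1:
        problems.append(f"TTL is {s.ttl}; 1 keeps multicast on the local subnet")
    if s.source_ip is not None:
        try:
            ipaddress.ip_address(s.source_ip)
        except ValueError:
            problems.append(f"source IP {s.source_ip} is not valid")
    if s.source_port is not None and not 1 <= s.source_port <= 65535:
        problems.append(f"source port {s.source_port} is out of range")
    if not s.enabled:
        problems.append("shared cache option is not set to yes")
    return problems


def same_sharing_group(a: CacheSharingSettings, b: CacheSharingSettings) -> bool:
    # Assumption: peers must agree on key, multicast address and port.
    # Source IP/port are per-server, so they are not compared.
    return (a.key, a.multicast_address, a.port) == (b.key, b.multicast_address, b.port)
```

For example, `check_settings(CacheSharingSettings("example-key", "239.1.1.1", 5000))` returns an empty list; the key, address and port here are placeholders.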

A firewall rule must be added as follows:

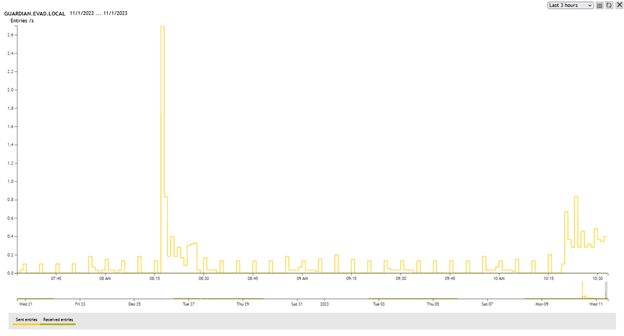
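When cache sharing does not seem to work, it can help to check whether multicast traffic for the configured group and port reaches the network segment at all. The following Python sketch is a generic IP multicast listener, not a Guardian tool. It assumes the cache sharing traffic is carried over UDP (the usual transport for IP multicast) and only reports datagram sizes and senders, since the payload format is not documented here.

```python
import socket
import struct


def membership_request(group: str, interface_ip: str = "0.0.0.0") -> bytes:
    # struct ip_mreq: multicast group address followed by the local interface
    # address (0.0.0.0 lets the OS pick the interface).
    return struct.pack("4s4s", socket.inet_aton(group), socket.inet_aton(interface_ip))


def listen(group: str, port: int, count: int = 5, timeout: float = 30.0) -> None:
    """Print the size and sender of up to `count` datagrams on group:port."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    # Join the multicast group so the kernel delivers its datagrams to us.
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP, membership_request(group))
    sock.settimeout(timeout)
    try:
        for _ in range(count):
            data, (src_ip, src_port) = sock.recvfrom(65535)
            print(f"{len(data)} bytes from {src_ip}:{src_port}")
    except socket.timeout:
        print("no multicast traffic received before the timeout")
    finally:
        sock.close()
```

For example, `listen("239.1.1.1", 5000)` (placeholder group and port) run on a host in the same subnet as the Guardian servers. With a TTL of 1, the traffic is not forwarded beyond that subnet, so a listener elsewhere will see nothing.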

In the properties of the Guardian server, you can confirm that cache sharing is working:

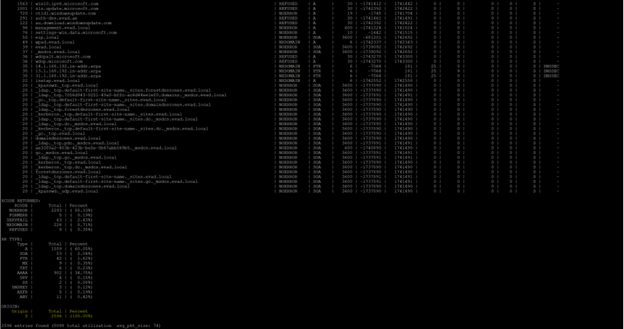

From the CLI, you can view the cache entries that were shared by other servers:

```
DNS Blast> show cache origin=S
```

To share the cache on demand, run the following command with the IP address of the target Guardian server:

```
share cache 192.168.1.41
```